What is Spark

The Apache Spark website states: "Apache Spark™ is a fast and general engine for large-scale data processing. ... Run programs up to 100x faster than Hadoop MapReduce in memory, or 10x faster on disk."

Whether or not these numbers hold in practice, I think the following key concepts and components support these claims:

a. It uses Resilient Distributed Datasets (RDDs), a programming model that differs substantially from common approaches such as MapReduce. Spark applies many optimizations (e.g. support for iterative computation and data locality) to spread the workload across the workers in a cluster, especially when computed data is reused.

RDD: A resilient distributed dataset (RDD) is a read-only collection of objects partitioned across a set of machines that can be rebuilt if a partition is lost.[1]
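To make the definition concrete, here is a toy, single-process model in plain Python. It is not Spark's API: `ToyRDD`, `from_source`, `lose_partition` and the `computed` counter are hypothetical names. Each dataset is split into read-only partitions and remembers how to compute each partition from its parent (its lineage), so a lost partition can be rebuilt on its own. As a simplification, the toy keeps every partition it builds, which real Spark only does for datasets you explicitly persist.

```python
from typing import Callable, List, Optional, Sequence, Tuple


class ToyRDD:
    """Toy model of an RDD (hypothetical class, not Spark's API)."""

    def __init__(self, compute: Callable[[int], Tuple], num_partitions: int):
        self._compute = compute  # lineage: how to (re)build partition i
        self._parts: List[Optional[Tuple]] = [None] * num_partitions
        self.computed = 0  # how many partitions have been (re)built

    @classmethod
    def from_source(cls, source: Sequence[Sequence]) -> "ToyRDD":
        # Stand-in for stable storage (e.g. a file): base partitions can always be re-read.
        frozen = [tuple(p) for p in source]
        return cls(lambda i: frozen[i], len(frozen))

    def map(self, f: Callable) -> "ToyRDD":
        # Transformations never modify this dataset; they return a new one
        # whose lineage points back at this one.
        parent = self
        return ToyRDD(lambda i: tuple(f(x) for x in parent.partition(i)),
                      len(self._parts))

    def partition(self, i: int) -> Tuple:
        if self._parts[i] is None:
            # Never built, or lost: rebuild just this partition from lineage.
            self._parts[i] = self._compute(i)
            self.computed += 1
        return self._parts[i]

    def lose_partition(self, i: int) -> None:
        # Simulate losing the machine that held partition i.
        self._parts[i] = None

    def collect(self) -> list:
        return [x for i in range(len(self._parts)) for x in self.partition(i)]
```

For example, `ToyRDD.from_source([[1, 2], [3, 4], [5, 6]]).map(lambda x: x * x)` collects to `[1, 4, 9, 16, 25, 36]`; after `lose_partition(1)`, collecting again recomputes only partition 1 and returns the same result.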

b. It uses memory as much as possible. Spark can keep intermediate results in memory rather than writing them to disk, so it largely avoids the disk I/O and serialization/deserialization costs between steps.
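The cost difference can be sketched with a toy model in plain Python (not Spark code): a dataset that is reused across several passes is either re-read and deserialized from disk every time, or loaded once and kept in memory. `DiskSource` and `run_iterations` are hypothetical names; in Spark itself the in-memory variant roughly corresponds to calling `cache()` or `persist()` on an RDD.

```python
import json
import os
import tempfile


def write_dataset(path, records):
    # Serialize records to disk, one JSON object per line.
    with open(path, "w") as f:
        for r in records:
            f.write(json.dumps(r) + "\n")


class DiskSource:
    """Hypothetical input that must be read and deserialized on every load."""

    def __init__(self, path):
        self.path = path
        self.reads = 0

    def load(self):
        self.reads += 1  # counts disk reads + deserializations
        with open(self.path) as f:
            return [json.loads(line) for line in f]


def run_iterations(source, iterations, cache):
    # cache=True: load once and keep the data in memory.
    # cache=False: go back to disk on every iteration.
    cached = source.load() if cache else None
    results = []
    for _ in range(iterations):
        data = cached if cache else source.load()
        results.append(sum(r["value"] for r in data))
    return results


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "data.jsonl")
        write_dataset(path, [{"value": i} for i in range(1000)])
        for cache in (False, True):
            src = DiskSource(path)
            run_iterations(src, iterations=5, cache=cache)
            print(f"cache={cache}: {src.reads} disk reads")
```

Run as a script, it reports 5 disk reads without caching and 1 with caching, while both variants compute the same results.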

In fact, we already use many tools that do similar things, such as memcached and Redis.

c. It emphasizes parallelism.
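The split/process/merge pattern behind this parallelism can be sketched in plain Python (not PySpark): the input is cut into partitions, each partition is processed independently by a worker, and the partial results are merged. The function names are hypothetical, and threads stand in for cluster workers; because of Python's GIL they will not speed up CPU-bound work, so the sketch shows the structure rather than the performance.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_partitions(data, num_partitions):
    # Contiguous slices of roughly equal size.
    if not data:
        return []
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def word_count_partition(lines):
    # Work done independently on one partition.
    counts = {}
    for line in lines:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
    return counts


def merge_counts(a, b):
    # Combine two partial results.
    merged = dict(a)
    for word, n in b.items():
        merged[word] = merged.get(word, 0) + n
    return merged


def parallel_word_count(lines, num_partitions=4, workers=4):
    partitions = split_into_partitions(lines, num_partitions)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(word_count_partition, partitions))
    return reduce(merge_counts, partials, {})
```

Each partition only needs its own slice of data, which is what lets a cluster engine hand partitions to different machines.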

d. It reduces JVM management overhead: for example, one long-lived executor runs many tasks, instead of one container per task as Hadoop MapReduce does on YARN.
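The difference in how many workers get started can be sketched with a toy model in plain Python. Threads stand in for JVMs/containers, and both function names are hypothetical; the sketch counts worker startups but does not measure real JVM startup cost.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_one_worker_per_task(tasks):
    # "One container per task" model: a fresh worker is started for every task.
    results, startups = [], 0
    for task in tasks:
        box = {}

        def target():
            box["result"] = task()

        worker = threading.Thread(target=target)
        worker.start()
        startups += 1
        worker.join()
        results.append(box["result"])
    return results, startups


def run_on_long_lived_executor(tasks, cores=2):
    # Executor model: one long-lived pool with a fixed number of task slots
    # runs every task, so at most `cores` workers are ever started.
    seen_workers = set()
    lock = threading.Lock()

    def run(task):
        with lock:
            seen_workers.add(threading.get_ident())
        return task()

    with ThreadPoolExecutor(max_workers=cores) as executor:
        results = list(executor.map(run, tasks))
    return results, len(seen_workers)
```

With 10 tasks, the first model starts 10 workers, while the executor model starts at most `cores` of them and reuses them for the remaining tasks.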

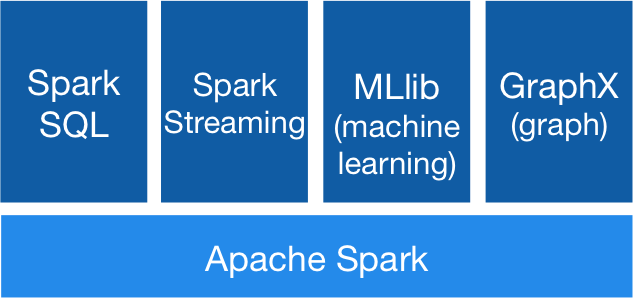

Architecture

(The core component serves as a platform for the other components.)

Uses of Spark

1. Iterative algorithms, e.g. machine learning and clustering.

2. Interactive analytics, e.g. querying a large amount of data that has been loaded from disk into memory, which reduces I/O latency.

3. Batch processing.
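An iterative algorithm of the first kind can be sketched in plain Python (not PySpark): gradient descent fitting `y ≈ w * x`, where each iteration computes one partial gradient per partition ("map") and sums them ("reduce"). The same partitions are read on every iteration, which is exactly the reuse that in-memory caching makes cheap. `partial_gradient` and `fit_slope` are hypothetical names, and the learning rate is an illustrative choice for this data.

```python
def partial_gradient(partition, w):
    # Gradient of the sum of 0.5 * (w*x - y)^2 over one partition.
    return sum((w * x - y) * x for x, y in partition)


def fit_slope(partitions, iterations=100, lr=0.01):
    w = 0.0
    n = sum(len(p) for p in partitions)
    for _ in range(iterations):
        # "map": one partial gradient per partition; "reduce": sum them.
        grad = sum(partial_gradient(p, w) for p in partitions)
        w -= lr * grad / n  # step along the mean gradient
    return w


if __name__ == "__main__":
    points = [(float(x), 3.0 * x) for x in range(1, 11)]
    partitions = [points[0:4], points[4:7], points[7:10]]
    print(fit_slope(partitions))
```

On these points (which lie exactly on `y = 3x`) the estimate converges to 3.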

Programming languages

Most of Spark's source code is written in Scala (I think many of its functions and ideas are inspired by Scala), but you can also write Spark programs in Java or Python.

Flexible integrations

Spark works with many popular frameworks, e.g. Hadoop, HBase, and Mesos.

ref:

[1] some papers
